NERDIO GUIDE


Amol Dalvi | July 28, 2025

AVD auto-scaling is a critical practice for managing your Azure Virtual Desktop environment. It dynamically adjusts the number of active session host VMs to match user demand. By using automation to power on resources during work hours and power them off when idle, you can significantly reduce your Azure cloud costs.

You pay only for what you use, while your users still get the capacity they need for a fast, responsive desktop experience, without performance bottlenecks or long waits for an available host.

Before diving into specific best practices, it's important to understand what AVD auto-scaling is and the core problem it solves. This practice involves using automated rules and schedules to dynamically increase or decrease the number of session host virtual machines (VMs) in your AVD host pools.

This matters because public cloud compute is billed for as long as it runs, and every IT department has two goals that pull in opposite directions: keeping costs down and keeping the user experience fast.

Ultimately, a robust auto-scaling strategy is not a minor optimization. It is a foundational component of an AVD environment that is both financially efficient and highly performant for your users.

An effective auto-scaling strategy is built on fundamental principles that balance cost control with a seamless user experience. Understanding these core concepts allows you to build a framework that is both efficient and reliable.

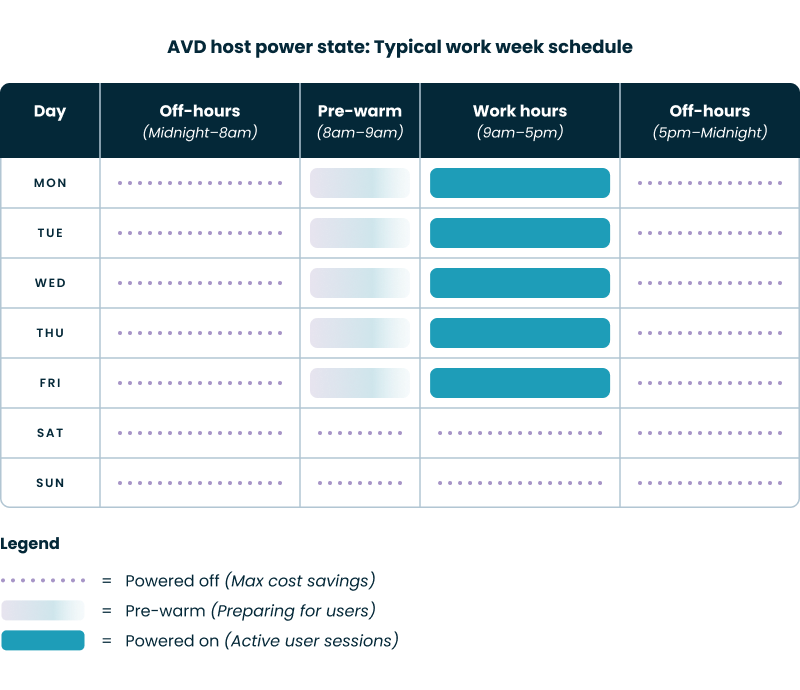

The most fundamental best practice is to ensure your AVD resources are only running when they are actually needed. This requires aligning your VM schedules with your organization's real-world work patterns.

Implementing granular, time-zone-aware schedules manually can be complex. Management platforms like Nerdio Manager for Enterprise allow you to create and apply these precise schedules through a simple interface, removing the need for custom scripting.
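
As a rough illustration of what a time-zone-aware schedule check involves, the Python sketch below tests whether a given instant falls inside a host pool's local working hours. The `ActiveHours` class, the `is_active` function, and the Chicago 7 AM to 7 PM window are hypothetical names and values chosen for illustration; they are not part of Azure or Nerdio Manager.

```python
from dataclasses import dataclass
from datetime import datetime, time, timezone
from zoneinfo import ZoneInfo


@dataclass(frozen=True)
class ActiveHours:
    """Hypothetical schedule: a local time window on selected weekdays."""
    tz: str              # IANA time zone name
    start: time          # local start of the active window
    end: time            # local end of the active window (exclusive)
    weekdays: frozenset  # 0 = Monday ... 6 = Sunday


def is_active(schedule: ActiveHours, now_utc: datetime) -> bool:
    """Return True if the given UTC instant falls inside the local window."""
    local = now_utc.astimezone(ZoneInfo(schedule.tz))
    in_window = schedule.start <= local.time() < schedule.end
    return local.weekday() in schedule.weekdays and in_window


# Assumed example: a US Central office working 7 AM to 7 PM, Monday to Friday.
central_office = ActiveHours("America/Chicago", time(7), time(19), frozenset(range(5)))

# 13:00 UTC on Monday, July 28, 2025 is 8:00 AM in Chicago (daylight time).
print(is_active(central_office, datetime(2025, 7, 28, 13, 0, tzinfo=timezone.utc)))
```

Converting from UTC into the pool's own zone, rather than storing fixed UTC offsets, keeps the window correct across daylight saving changes.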

Auto-scaling is a constant balancing act between two competing goals: minimizing cost and maximizing performance.

An optimal strategy avoids extremes. It maintains a small buffer of available resources to absorb unexpected logins but aggressively scales in during periods of inactivity.

You can scale your environment based on different types of triggers, and the most effective strategies use a hybrid approach.

A best-practice strategy combines both. You use proactive schedules to build your baseline capacity for the day and then overlay reactive scaling to handle unexpected surges in demand. For example, platforms like Nerdio Manager for Enterprise use a hybrid engine that proactively prepares capacity for the workday and then reactively adds or removes hosts based on real-time session usage, giving you the best of both worlds.
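
The hybrid idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular engine is implemented: `desired_hosts` and its parameters are hypothetical, and the per-host session capacity is a number you would choose for your own workload.

```python
import math


def desired_hosts(scheduled_baseline: int, active_sessions: int,
                  sessions_per_host: int, max_hosts: int) -> int:
    """Combine a scheduled floor with a load-driven target, capped at max_hosts."""
    if sessions_per_host <= 0:
        raise ValueError("sessions_per_host must be positive")
    # Reactive part: hosts needed to carry the current session count.
    needed_for_load = math.ceil(active_sessions / sessions_per_host)
    # Proactive part: never drop below the baseline the schedule asks for.
    return min(max_hosts, max(scheduled_baseline, needed_for_load))


# Quiet morning: the scheduled baseline of 2 hosts is enough for 10 sessions.
print(desired_hosts(scheduled_baseline=2, active_sessions=10, sessions_per_host=8, max_hosts=10))
# Unexpected surge: 40 sessions push the target above the baseline.
print(desired_hosts(scheduled_baseline=2, active_sessions=40, sessions_per_host=8, max_hosts=10))
```

The schedule sets the floor, real-time usage can raise the target above it, and the cap keeps a runaway surge from exceeding the host pool's limit.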

Once you understand the principles, you must choose the right technical methods for scaling your hosts. These methodologies determine how your environment expands and contracts to handle user sessions.

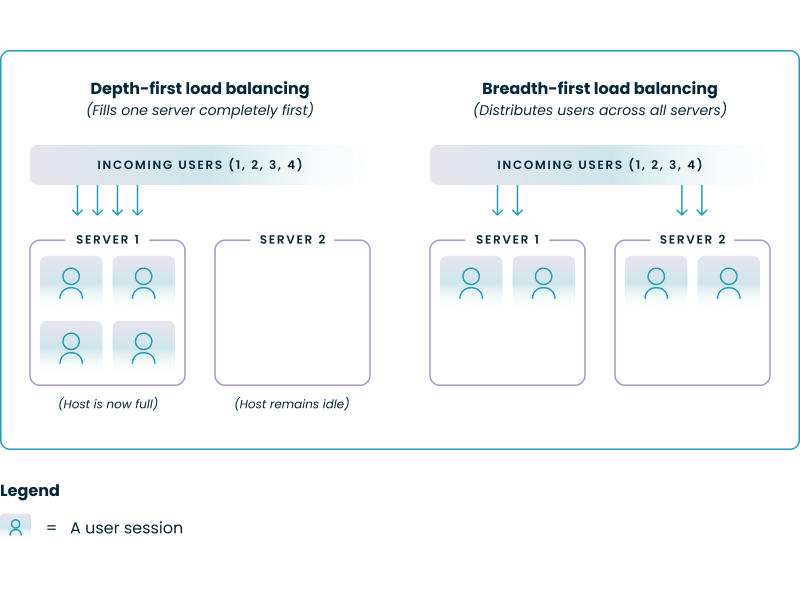

Load balancing determines how new user sessions are distributed across the active hosts in your host pool.

There is no single "best" method; it depends on your priority. Depth-first fills one host before using the next, which leaves more hosts idle and eligible for shutdown, while breadth-first spreads users across all active hosts for more consistent performance. AVD host pools default to breadth-first. The best practice is to keep the flexibility to choose: management platforms like Nerdio Manager expose this as a simple configuration setting, so you can use depth-first for cost savings and breadth-first for performance-sensitive user groups.
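
To make the difference between the two methods concrete, here is a small Python sketch of how a new session might be placed under each one. The `pick_host` function and the host names are hypothetical; in a real host pool the AVD service performs this placement.

```python
from typing import Optional


def pick_host(sessions: dict[str, int], max_sessions: int, method: str) -> Optional[str]:
    """Choose a host for a new session, or None if every host is full."""
    available = {host: count for host, count in sessions.items() if count < max_sessions}
    if not available:
        return None
    if method == "breadth-first":
        # Spread load: send the session to the least-loaded host.
        return min(available, key=available.get)
    if method == "depth-first":
        # Pack load: send the session to the fullest host that still has room.
        return max(available, key=available.get)
    raise ValueError(f"unknown load balancing method: {method}")


pool = {"host-0": 5, "host-1": 2, "host-2": 0}
print(pick_host(pool, max_sessions=8, method="breadth-first"))
print(pick_host(pool, max_sessions=8, method="depth-first"))
```

With depth-first, `host-2` stays empty and can be shut down; with breadth-first, it receives the next user.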

Adding and removing session host VMs, also known as horizontal scaling, is the most common form of scaling.

This is the primary mechanism you will use to match capacity to user load throughout the day.

Scaling up and down, known as vertical scaling, is a more advanced technique in which you change the size of the VMs themselves.

While not as common as horizontal scaling, this can be a powerful tool for environments with highly variable performance requirements. For example, a development VDI environment could use larger VMs during the day for compiling code and automatically scale down to smaller, cheaper VMs overnight. Manually scripting this is complex, but the functionality can be automated within a platform like Nerdio Manager.


Beyond basic scaling, advanced automation techniques can unlock further cost savings and improve operational security and efficiency.

Session hosts often use Premium SSDs for high performance during the user session, but managed disks are billed whether or not the VM is running, so you keep paying for that expensive storage even when the VM is deallocated.

An advanced best practice is to automate disk-type switching. When the auto-scaler deallocates a VM after hours, it can also trigger a process to change its OS disk from a Premium SSD to a low-cost Standard HDD. Before the VM is powered back on in the morning, the process is reversed. This has no impact on user-perceived performance but can significantly reduce your monthly storage costs. This functionality is complex to script but is an integrated, one-click feature in optimization platforms like Nerdio Manager for Enterprise.
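
The decision logic behind disk-type switching can be sketched as follows. This is an illustrative Python outline only: `planned_disk_sku` is a hypothetical helper, the "start expected soon" flag is assumed to come from your schedule, and the sketch only decides which SKU to request rather than calling Azure. `Premium_LRS` and `Standard_LRS` are the Azure managed disk SKU names for Premium SSD and Standard HDD.

```python
from typing import Optional

PREMIUM_SSD = "Premium_LRS"    # Azure managed disk SKU name for Premium SSD
STANDARD_HDD = "Standard_LRS"  # Azure managed disk SKU name for Standard HDD


def planned_disk_sku(current_sku: str, vm_deallocated: bool,
                     start_expected_soon: bool) -> Optional[str]:
    """Return the SKU to switch to, or None if no change is needed."""
    if not vm_deallocated:
        # This sketch only touches disks that belong to deallocated VMs.
        return None
    target = PREMIUM_SSD if start_expected_soon else STANDARD_HDD
    return target if target != current_sku else None


# After hours: the VM is deallocated and not needed soon, so downgrade the disk.
print(planned_disk_sku(PREMIUM_SSD, vm_deallocated=True, start_expected_soon=False))
# Before the workday: switch back so the disk is Premium again at power-on.
print(planned_disk_sku(STANDARD_HDD, vm_deallocated=True, start_expected_soon=True))
```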

The performance and security of your AVD environment depend on the health of your golden image. A best practice is to regularly update your image with the latest Windows updates, application patches, and security configurations.

Automating this process ensures consistency and reduces manual labor. A complete automation workflow includes:

Platforms like Nerdio integrate image management directly into the AVD lifecycle, allowing you to schedule these updates and deployments automatically.


You cannot optimize what you cannot measure. A final best practice is to continuously monitor key metrics to validate and refine your auto-scaling policies. A crucial part of this process involves a regular audit to identify and de-provision orphaned or unused virtual desktops, which prevents the accumulation of unnecessary compute and storage costs over time.

This data is vital for effective cloud cost management, which involves the continuous process of monitoring, analyzing, and optimizing your cloud spending to maximize business value. While Azure provides many of these metrics, a dedicated management platform often provides a consolidated view. For example, Nerdio Manager includes built-in dashboards that translate your auto-scale activity directly into a "Billed vs. Potential Cost" report, giving you a clear, tangible view of the ROI of your optimization efforts.
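
The arithmetic behind a billed-versus-potential comparison is simple enough to sketch. The Python example below assumes that "potential" means the compute cost of leaving a host running for the whole period; the `cost_report` function, the 0.50 hourly rate, and the hour counts are made-up illustration values, not Azure prices or Nerdio output.

```python
def cost_report(hours_in_period: float, hours_running: float, hourly_rate: float) -> dict:
    """Compare always-on compute cost with the cost of the hours actually run."""
    potential = hours_in_period * hourly_rate  # cost if the VM never stopped
    billed = hours_running * hourly_rate       # cost of the hours it was on
    saved = potential - billed
    saved_pct = 100 * saved / potential if potential else 0.0
    return {
        "potential": round(potential, 2),
        "billed": round(billed, 2),
        "saved": round(saved, 2),
        "saved_pct": round(saved_pct, 1),
    }


# Hypothetical month: 720 hours in total, host running 12 hours on 22 workdays.
print(cost_report(hours_in_period=720, hours_running=12 * 22, hourly_rate=0.50))
```

This covers compute only; storage, licensing, and networking costs would need their own lines in a fuller report.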


Implementation requires careful configuration to ensure the automation is both effective and non-disruptive to your users. A unified management platform can simplify these steps significantly.

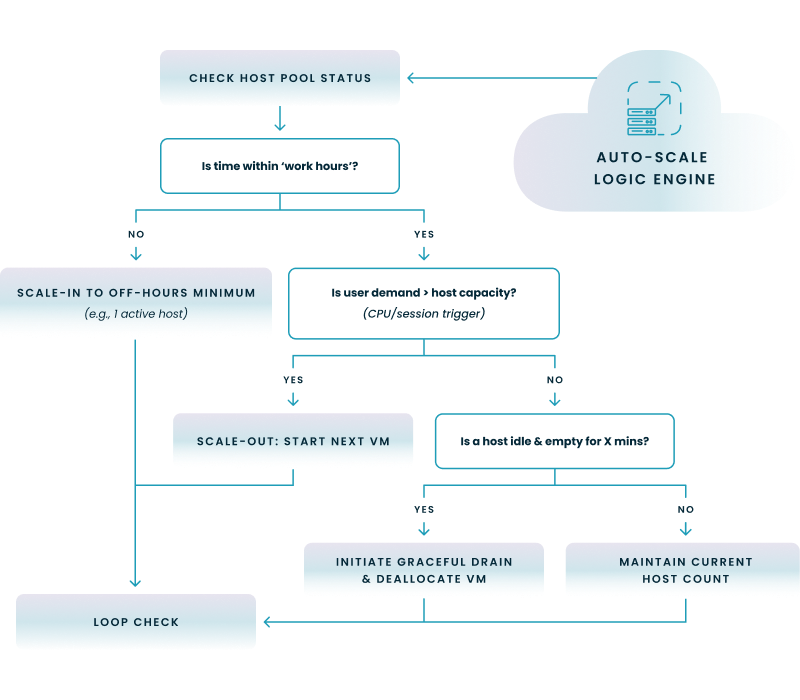

The flowchart maps the end-to-end logic behind a best-practice auto-scaling implementation. It shows how an advanced engine combines schedules, responds to real-time user demand, and handles idle resources gracefully. The following subsections explore the key components of this process in greater detail, including configuring standby hosts and managing user sessions during scale-in operations.

To avoid making users wait for a VM to start, a best practice is to always maintain a buffer of available, running hosts during business hours.

While this can be scripted, it's easier to manage in a platform like Nerdio Manager, where setting the "Minimum Active Hosts" is a simple input field within the auto-scale configuration.

The cardinal rule of scaling in is to never forcibly log off a user with an active session. A graceful shutdown process is a critical best practice.

This graceful process is essential for user trust and data integrity. Nerdio's auto-scale engine has this logic built-in, automatically managing drain mode and handling disconnected sessions according to administrator-defined policies to ensure shutdowns are never disruptive.
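
A graceful scale-in can be thought of as a small state machine per host. The Python sketch below is one possible way to express it, under assumptions: `HostState`, `next_scale_in_action`, and the action names are hypothetical, and a real implementation would call the AVD and compute APIs to carry out each action.

```python
from dataclasses import dataclass, field


@dataclass
class HostState:
    """Hypothetical snapshot of a session host selected for scale-in."""
    name: str
    drain_mode: bool = False
    active_sessions: int = 0
    # Minutes since disconnect for each disconnected session on the host.
    disconnected_idle_minutes: list = field(default_factory=list)


def next_scale_in_action(host: HostState, disconnect_timeout_min: int) -> str:
    """Decide the next non-disruptive step toward shutting the host down."""
    if not host.drain_mode:
        return "enable_drain_mode"  # stop new sessions landing on this host
    if host.active_sessions > 0:
        return "wait"               # never log off an active user
    if any(idle < disconnect_timeout_min for idle in host.disconnected_idle_minutes):
        return "wait"               # a disconnected user may still come back
    if host.disconnected_idle_minutes:
        return "log_off_disconnected_sessions"
    return "deallocate"             # host is empty and safe to power off


host = HostState("host-3", drain_mode=True, active_sessions=0,
                 disconnected_idle_minutes=[75, 90])
print(next_scale_in_action(host, disconnect_timeout_min=60))
```

Running the function on each evaluation cycle moves a host one safe step closer to shutdown, and it only ever returns `deallocate` once no sessions of any kind remain.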

Different user groups have different needs. Your finance department may work a standard 9-to-5 schedule, while your IT support team may need 24/7 availability. Applying distinct policies is a key part of effective resource management: it allocates compute power across the organization according to each department's needs and priorities. The same principle applies to software entitlements, where a deliberate strategy for assigning and managing licenses minimizes waste and helps ensure compliance.

A best practice is to create separate auto-scaling policies for each distinct user group or host pool. Manually, this can be done by using Azure tags to identify which VMs belong to which group and adding complex logic to your scripts. A more efficient approach is to use a management platform that allows you to create named policies (e.g., "Finance Dept Schedule," "Power User Performance") and apply them to different host pools through a graphical user interface.

This table compares two distinct auto-scaling profiles to illustrate how you can tailor policies for different user groups, balancing cost for standard users against performance for power users.

| Setting / Parameter | Finance Host Pool (Cost-Optimized Profile) | Developer Host Pool (Performance-Optimized Profile) |
|---|---|---|
| Active Hours Schedule | 7:00 AM - 7:00 PM, Monday - Friday | 8:00 AM - 10:00 PM, Monday - Friday; 10:00 AM - 4:00 PM, Saturday |
| Load Balancing Method | Depth-First: Fills one host completely before using the next to maximize the number of idle hosts that can be shut down. | Breadth-First: Distributes users across all active hosts to ensure no single user experiences performance lag. |
| Minimum Active Hosts | 1 Host: A minimal buffer to handle initial logins while keeping baseline costs as low as possible. | 3 Hosts: A larger buffer to guarantee immediate resource availability for performance-sensitive tasks. |
| VM Size (SKU) | Standard_D4as_v5 (4 vCPU, 16 GiB RAM) | Standard_D8as_v5 (8 vCPU, 32 GiB RAM) |
| Disconnected Session Timeout | Log off after 60 minutes of inactivity to free up resources quickly. | Log off after 240 minutes of inactivity to support long-running processes and builds. |
| Storage Auto-Scaling | Enabled: Automatically switches OS Disks from Premium SSD to Standard HDD when VMs are off to reduce storage costs. | Disabled: Keeps Premium SSDs active at all times to ensure the absolute fastest VM start times when needed. |
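
If you manage policies as code rather than through a GUI, the two profiles in the table could be captured as data along these lines. This is a sketch only: the `AutoScalePolicy` class and its field names are hypothetical and do not correspond to an Azure or Nerdio schema; the values are taken from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutoScalePolicy:
    """Hypothetical per-host-pool policy mirroring the table's rows."""
    active_hours: tuple          # (days, start, end) entries in local time
    load_balancing: str          # "depth-first" or "breadth-first"
    min_active_hosts: int
    vm_size: str
    disconnect_timeout_min: int
    storage_auto_scaling: bool


POLICIES = {
    "finance": AutoScalePolicy(
        active_hours=(("Mon-Fri", "07:00", "19:00"),),
        load_balancing="depth-first",
        min_active_hosts=1,
        vm_size="Standard_D4as_v5",
        disconnect_timeout_min=60,
        storage_auto_scaling=True,
    ),
    "developer": AutoScalePolicy(
        active_hours=(("Mon-Fri", "08:00", "22:00"), ("Sat", "10:00", "16:00")),
        load_balancing="breadth-first",
        min_active_hosts=3,
        vm_size="Standard_D8as_v5",
        disconnect_timeout_min=240,
        storage_auto_scaling=False,
    ),
}

for pool, policy in POLICIES.items():
    print(pool, policy.load_balancing, policy.min_active_hosts, policy.vm_size)
```

Keeping each profile as a named, immutable record makes it easy to review differences between host pools and to apply the same policy to more than one pool.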

While often used interchangeably, "Autoscale" in Azure typically refers to the Azure Monitor feature that lets you define rules for scaling resources based on performance metrics or a fixed schedule. "Automatic scaling" is a simpler, specific feature for Azure App Service that handles scaling based on incoming HTTP traffic without manually configured rules. Essentially, Autoscale provides deep, rule-based control, while automatic scaling offers simpler, traffic-based automation for web apps.

Auto scaling is a cloud computing feature that automatically adjusts the number of compute resources allocated to an application based on its current needs. You can auto scale a service in two primary ways: by creating rules that react to real-time performance metrics (like CPU usage crossing 75%), or by setting a schedule to add and remove resources at specific times (like adding servers at 9 AM on weekdays). These actions ensure performance during high demand and save costs during quiet periods.
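
A metric-based rule of the kind described above can be sketched in Python as follows. The 75% scale-out threshold comes from the example in the text; the 25% scale-in threshold, the `scaling_decision` name, and the use of a simple average over recent samples are assumptions made for illustration.

```python
from statistics import mean


def scaling_decision(cpu_samples: list, scale_out_above: float = 75.0,
                     scale_in_below: float = 25.0) -> int:
    """Return +1 to add capacity, -1 to remove it, or 0 to hold steady."""
    if not cpu_samples:
        return 0  # no data: make no change
    average = mean(cpu_samples)
    if average > scale_out_above:
        return 1
    if average < scale_in_below:
        return -1
    return 0


print(scaling_decision([80, 90, 70]))  # average CPU is above the 75% threshold
print(scaling_decision([10, 20, 15]))  # average CPU is below the 25% threshold
print(scaling_decision([50, 60]))      # in between: hold steady
```

Leaving a gap between the two thresholds helps avoid flapping, where capacity is added and removed repeatedly around a single cutoff.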

The single most important reason to use automated scaling for Azure Virtual Desktop (AVD) is to dramatically reduce costs. AVD environments are often only used during business hours, and auto scaling allows you to automatically shut down and deallocate virtual machines during nights and weekends. This ensures you only pay for the compute resources when they are actively needed, preventing significant waste.

The two most popular methods for auto scaling in Azure are using Azure Monitor Autoscale and creating automation with Azure Functions or Azure Automation. Azure Monitor Autoscale is the native, built-in way to scale resources like Virtual Machine Scale Sets and App Services based on metrics or schedules. For more complex scenarios, like AVD or custom applications, users often leverage Azure Automation runbooks or lightweight Azure Functions to script precise start/stop actions.

Software product executive and Head of Product at Nerdio, with 15+ years leading engineering teams and 9+ years growing a successful software startup to 20+ employees. A 3x startup founder and angel investor, with deep expertise in Microsoft full stack development, cloud, and SaaS. Patent holder, Certified Scrum Master, and agile product leader.